Introduction to Server Performance

Servers are central to the large volumes of data processing and storage that businesses depend on. Their performance directly affects application responsiveness and overall system uptime. Hardware keeps getting more capable, but raw processing power on its own does not guarantee optimal operation.

Effective server operation depends on several factors working together, and two of the most important are thermal management and CPU capability. The CPU handles the server's demanding computational work, which makes it the foundation of performance.

However, the heat generated during operation is a serious problem in its own right. Servers under constant thermal load face a greater risk of hardware failure, which disrupts workflows and leads to expensive repairs.

Modern servers are designed to balance power and efficiency, but that balance can be fragile. To achieve high performance and reliability, you need to understand how a server's processing demands interact with its thermal output, and build an environment that meets both. By identifying and removing potential bottlenecks, companies can keep servers running at the desired level without compromising operational stability.

Understanding Thermal Balance

Thermal balance in a server means keeping heat at stable levels so that the system operates normally and high-intensity components are not damaged. Servers generate a lot of heat while working, and that heat must be dissipated efficiently to avoid losses in performance and hardware reliability. Cooling mechanisms such as fans, heatsinks and more advanced technologies are essential for managing it.

One of the key problems poor thermal balance causes is accelerated component wear. Chronic exposure to extreme heat strains sensitive hardware, leading to breakdowns that interrupt business operations.

Excess heat also affects energy use: more cooling is needed to counter rising temperatures, which increases energy consumption and operating costs.

Environmental factors also matter when maintaining thermal balance. Inadequate ventilation, poor server placement or insufficient spacing can all contribute to heat buildup, making it harder to maintain optimal temperatures.

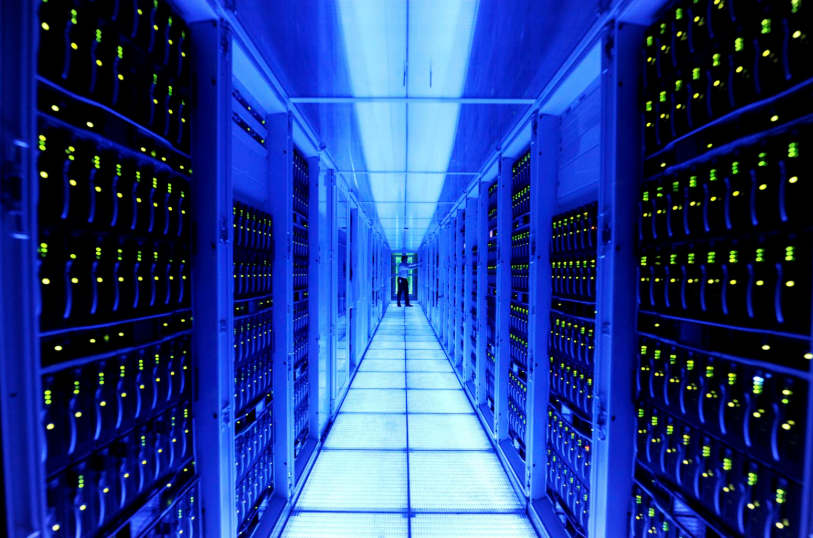

In data centers, this balance is achieved through well-designed infrastructure that promotes airflow and eliminates hot spots that could compromise server stability.

By treating heat management as a core performance consideration, organizations can create an environment that supports reliable server operation even under heavy workloads. Thermal management techniques are necessary to sustain server performance and prevent unnecessary downtime or maintenance.

CPU Power Considerations

A server's CPU power determines its ability to handle complex workloads and meet the needs of contemporary applications. CPU performance is a decisive factor in how well a server performs complex computations, processes data at high speed and multitasks with minimal latency. This makes choosing the right CPU specifications a key task when building or upgrading a server environment.

As demand for faster data processing grows, more powerful CPUs are becoming common in servers. However, more powerful processors typically consume more energy and generate more heat. Without adequate cooling, this extra heat can stress the server's internal components and cause thermal inefficiencies.

Overheating not only shortens hardware lifespan but can also reduce processing speed, because servers use throttling mechanisms that slow the processor to prevent hardware damage.

This makes it crucial to select CPUs that meet your operational requirements without exceeding the server's thermal design limits.

Processors with many cores and high clock speeds offer impressive computational capability, but they must be paired with cooling that can handle their thermal output. Proper planning ensures that server performance increases without causing temperature-related problems.

CPU selection should also take workload requirements into account. For example, a server running virtualization or machine learning workloads may need more cores, whereas a database server may benefit more from faster single-threaded performance. Balancing these needs against heat generation and energy consumption is important for long-term operational efficiency.

Finally, the performance and condition of server CPUs should be monitored periodically. As technology advances, newer processors may offer better performance per watt, delivering the required upgrades while easing the load on cooling equipment. By monitoring CPU power closely and pairing it with the right cooling systems, businesses can get the performance they need without compromising system stability.

Comparing Thermal Balance and CPU Power

Thermal balance and CPU power are both important factors in server performance, and they cannot be treated separately. More powerful CPUs, i.e. those with more cores or higher clock speeds, handle demanding workloads more efficiently. However, this extra processing power comes at a cost: it generates more heat, which requires stronger cooling to prevent temperature buildup.

If a server's cooling infrastructure cannot adequately handle the heat generated by high-performance CPUs, the result can be thermal throttling, in which the processor slows down to prevent overheating.

Although this safety mechanism is essential for protecting hardware, it directly reduces server performance and undermines the benefit of a powerful CPU. Moreover, sustained overheating can gradually wear out other internal components, increasing the risk of system-wide inefficiencies or breakdowns.
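To make the throttling behaviour concrete, here is a deliberately simplified Python model. It is not how any particular CPU or firmware implements throttling: the class name `ToyCpu`, the clock values, the step size and the 95 °C threshold are hypothetical numbers chosen for illustration, and temperatures are supplied as input rather than modelled. Each control step lowers the clock while the temperature is at or above the threshold and lets it recover toward the base clock once the chip cools.

```python
# Toy model of thermal throttling. The clocks, threshold and step size are
# illustrative assumptions, not figures for any real processor.
from dataclasses import dataclass
from typing import List


@dataclass
class ToyCpu:
    base_ghz: float = 3.0       # hypothetical nominal clock
    min_ghz: float = 1.2        # hypothetical lowest throttled clock
    throttle_c: float = 95.0    # hypothetical throttle threshold in deg C
    step_ghz: float = 0.2       # clock change per control step

    def next_clock(self, current_ghz: float, temp_c: float) -> float:
        """Step the clock down while too hot, back up toward base when cool."""
        if temp_c >= self.throttle_c:
            return max(self.min_ghz, current_ghz - self.step_ghz)
        return min(self.base_ghz, current_ghz + self.step_ghz)


def simulate(cpu: ToyCpu, temps_c: List[float]) -> List[float]:
    """Return the clock (GHz) chosen at each step for a series of temperatures."""
    clock = cpu.base_ghz
    history = []
    for temp in temps_c:
        # Round each step so repeated float additions do not drift.
        clock = round(cpu.next_clock(clock, temp), 2)
        history.append(clock)
    return history


if __name__ == "__main__":
    temps = [80, 92, 96, 97, 98, 96, 90, 85]
    print(simulate(ToyCpu(), temps))
```

Feeding in a short heat spike shows the clock dropping step by step while the temperature stays high and only partially recovering afterwards; that lost clock speed is the performance penalty described above.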

Balancing a CPU's performance requirements against the server's ability to dissipate its heat starts with checking the processors' thermal design power (TDP) and making sure the server's cooling can handle that heat. Workload should also factor into this balance, since servers running application-intensive, data analytics or AI workloads are more likely to exceed thermal limits.

Organizations should also be aware of how environmental conditions such as room temperature and airflow affect thermal efficiency. No matter how sophisticated the processors or how effective the cooling, external factors such as poor ventilation or improper hardware spacing can undermine thermal control. This is why server setups should be designed holistically, with thermal conditions and CPU capabilities considered together.
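As a rough illustration of the TDP check, the following Python sketch adds up component heat output and compares it with a cooling capacity after reserving a safety margin. It assumes, as a simplification, that each CPU dissipates its rated TDP as sustained heat; real processors can draw more than their TDP while boosting. The function names, the 20% margin and the example wattages are hypothetical. The one fixed fact used is the conversion 1 W ≈ 3.412 BTU/h, which is useful because cooling equipment is often rated in BTU/h.

```python
# Rough thermal-headroom check. All names, margins and wattages are
# illustrative assumptions, not vendor figures.

WATTS_TO_BTU_PER_HR = 3.412  # 1 W of heat is about 3.412 BTU/h


def heat_load_watts(cpu_tdps_w, other_components_w=0.0):
    """Estimate sustained heat output, treating each CPU's TDP as its heat load."""
    return sum(cpu_tdps_w) + other_components_w


def cooling_headroom_w(load_w, cooling_capacity_w, safety_margin=0.2):
    """Spare cooling in watts after reserving a safety margin.

    A negative result means the estimated load exceeds usable cooling.
    """
    usable_w = cooling_capacity_w * (1.0 - safety_margin)
    return usable_w - load_w


if __name__ == "__main__":
    # Hypothetical dual-socket server: two 205 W CPUs plus 150 W for everything else.
    load = heat_load_watts([205, 205], other_components_w=150)
    headroom = cooling_headroom_w(load, cooling_capacity_w=800)
    print(f"Heat load: {load:.0f} W (~{load * WATTS_TO_BTU_PER_HR:.0f} BTU/h)")
    print("OK" if headroom >= 0 else "Over budget", f"(headroom {headroom:.0f} W)")
```

With the same hypothetical 800 W of cooling, swapping in two 270 W processors raises the estimated load to 690 W, above the 640 W usable after the margin, so the headroom turns negative: the situation in which throttling becomes likely.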

Strategies for Achieving Thermal Balance

Reaching thermal equilibrium requires attention to both effective cooling and an appropriate environment. Start by installing servers in a setting that allows heat to dissipate. Position hardware with enough clearance between units for air to circulate, avoiding the overheating that tightly packed setups cause.

Rack designs that account for ventilation and airflow patterns can significantly influence temperature levels across the facility.

Another effective strategy is to deploy advanced cooling systems. Liquid cooling, for example, regulates heat better than conventional air-based cooling.

These systems can handle higher thermal loads and are especially suitable for servers with high-performance CPUs that run hot. Data-center-grade air conditioning units, used together with appropriate humidity controls, can also help regulate ambient temperature.

Thermal management systems need regular maintenance. Clean dust and debris from server components, fans and filters to keep air flowing. Blockages in cooling equipment can quickly lead to ineffective heat dissipation, putting extra strain on both the servers and the cooling systems.

Install monitoring software to track server temperatures and cooling output in real time. These tools can highlight potential problem areas and enable immediate action when temperatures exceed safe levels.

Automated alerts can notify teams of temperature changes, minimizing the risk of unexpected downtime or equipment damage.
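A minimal sketch of such alerting logic might look like the Python below. It deliberately leaves out how readings are collected: on Linux they could come from a library call such as `psutil.sensors_temperatures()` or from the server's management controller. The sensor names and the 75 °C warning and 90 °C critical thresholds are hypothetical placeholders; replace them with the limits documented for your hardware.

```python
# Threshold-based temperature alerting. Sensor names and thresholds are
# hypothetical; use the limits documented for your own hardware.
from typing import Dict, List

WARN_C = 75.0   # hypothetical warning threshold
CRIT_C = 90.0   # hypothetical critical threshold


def classify(temp_c: float, warn_c: float = WARN_C, crit_c: float = CRIT_C) -> str:
    """Map a reading to 'ok', 'warning' or 'critical'."""
    if temp_c >= crit_c:
        return "critical"
    if temp_c >= warn_c:
        return "warning"
    return "ok"


def build_alerts(readings: Dict[str, float],
                 warn_c: float = WARN_C, crit_c: float = CRIT_C) -> List[str]:
    """Return one message per sensor at or above the warning level, sorted by name."""
    alerts = []
    for sensor, temp_c in sorted(readings.items()):
        level = classify(temp_c, warn_c, crit_c)
        if level != "ok":
            alerts.append(f"{level.upper()}: {sensor} at {temp_c:.1f} C")
    return alerts


if __name__ == "__main__":
    sample = {"cpu0": 68.0, "cpu1": 81.5, "cpu2": 92.0}
    for message in build_alerts(sample):
        print(message)  # in practice, forward to email, chat or a paging system
```

Keeping classification separate from data collection makes the thresholds easy to test and lets the same logic serve several data sources.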

When designing or upgrading a server setup, consider the thermal design power (TDP) of its components. Choose equipment that fits within your cooling capacity rather than hardware that will overload your thermal limits. With proper planning, hardware stays within safe temperature ranges, performance bottlenecks are avoided and system degradation is minimized.

Environmental factors outside the server racks also contribute to thermal balance. Ensure proper airflow through the server room, using a raised floor, cold aisle containment or similar arrangements, to prevent heat buildup and keep cooling optimal.

Conclusion

Ensuring server performance is a delicate balance between computational power and efficient heat management. High-performance CPUs provide the processing capacity that modern workloads require, but neglecting thermal conditions can prevent you from realizing their full benefits.

Overheating is a serious issue: it can damage hardware, and it also affects energy efficiency and operating costs. Heat management should therefore receive serious consideration alongside CPU selection.

The key to stable server performance is a system that matches performance needs with sufficient cooling infrastructure. This means selecting components that operate within your system's thermal constraints and ensuring that the cooling can handle the heat produced under peak working conditions.

Regular maintenance, environmental adjustments and real-time monitoring tools that detect problems early all support these efforts.

Ultimately, good thermal balance is not just about protecting hardware; it is key to consistent performance and to avoiding expensive interruptions. By investing in heat management strategies that match their processing requirements, businesses can build efficient processing environments that support both operational effectiveness and long-term viability.

If you’re ready for a hosting environment where thermal balance and CPU performance work together for maximum speed and reliability, upgrade to OffshoreDedi offshore hosting and experience smoother workloads today.