Database redundancy is one of the most common data management problems, and it can seriously harm enterprise operations. When the same data is held in multiple databases, it wastes resources, consumes more storage space, and leads to inconsistent data. Organizations therefore need to understand why duplicate data arises and what it costs them, so that their efficiency is not compromised.

Inefficient Database Design Leading to Redundancy

A significant culprit behind database redundancy is inefficient database design. Poorly normalized databases can result in the same data being stored in multiple locations, creating unnecessary data replication. This often occurs when no explicit schema or structure guides data organization within the database. For instance, having a customer’s contact information spread across several tables for different purposes without a unifying key or reference can generate multiple instances of the same data.

This not only wastes storage space but also makes data management harder, because every copy must be kept in sync whenever the data changes. To minimize this, databases should be designed around the data dependencies and requirements of the application, following normalization principles. Normalization organizes data so that each fact is stored only once and other tables refer to it through keys. This eliminates redundancy along with the update anomalies that come with it, and makes databases much more efficient.
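A normalized layout can be sketched with Python's built-in `sqlite3` module. The `customers` and `orders` tables, their columns and the sample values are hypothetical and are used only for illustration. The point of the sketch is that the email address lives in one row and every order reaches it through `customer_id`. A single `UPDATE` is therefore enough to change what every order sees.

```python
import sqlite3
from typing import List

# Hypothetical schema: contact details are stored once, in `customers`;
# `orders` refers to them through the customer_id key instead of copying them.
SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL
);
"""

def build_demo() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # make SQLite enforce the key reference
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO customers VALUES (1, 'Ada', 'ada@old.example')")
    conn.executemany(
        "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
        [(1, 20.0), (1, 35.5), (1, 12.0)],
    )
    return conn

def order_emails(conn: sqlite3.Connection) -> List[str]:
    # Each order's email is looked up through the key at query time.
    rows = conn.execute(
        "SELECT c.email FROM orders o JOIN customers c USING (customer_id) "
        "ORDER BY o.order_id"
    )
    return [email for (email,) in rows]

conn = build_demo()
# One UPDATE in one place changes the address seen by all three orders.
conn.execute("UPDATE customers SET email = 'ada@new.example' WHERE customer_id = 1")
print(order_emails(conn))
# ['ada@new.example', 'ada@new.example', 'ada@new.example']
```

In a denormalized design, the same change would have to touch every order row. Any row that was missed would become an inconsistent copy.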

This approach does not just save storage space. It also keeps information organized and easy to retrieve, which is essential for sound data management and for avoiding multiple copies of the same data.

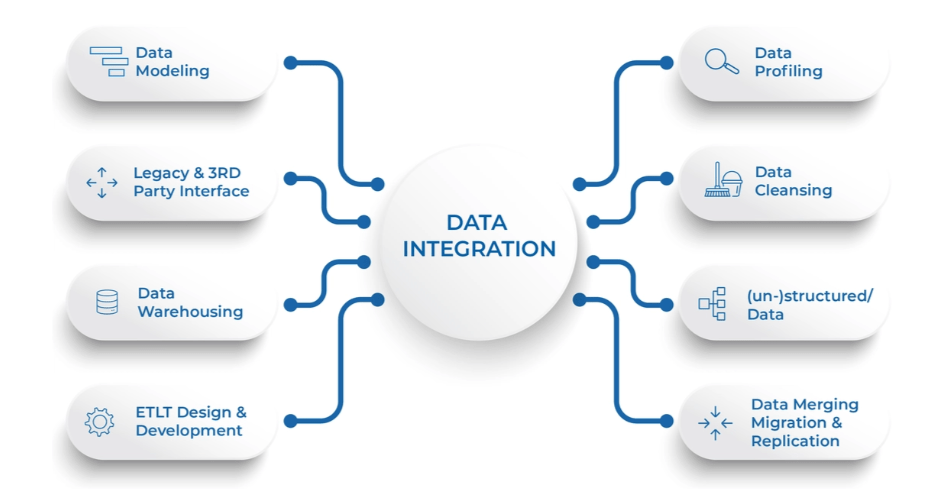

Lack of Proper Data Integration Across Systems

One pivotal factor contributing to database redundancy is inadequate data integration across systems. When databases and applications operate in silos, without a mechanism that keeps their data synchronized, the likelihood of duplicate records rises sharply. This fragmentation spreads inconsistent datasets across platforms and makes it difficult to maintain a single source of truth.

The essence of robust data integration lies in the seamless flow of information: an update in one system is promptly reflected in all the others. Without this synchronization, efforts to consolidate data become difficult, and redundant data tends to accumulate. Organizations that want to close these gaps therefore need mature data integration tools and technologies.

Such tools can propagate new and updated data between systems in near real time, which keeps duplicate records to a minimum. Beyond tooling, the data management plan should centralize data sources so that all systems work from a standard data format. Organizations can then work with their data more consistently, quickly, and accurately, and reduce the risks posed by inefficient data systems.
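Centralizing data sources usually involves two steps. First, match the records that different systems hold about the same entity. Second, merge them into one master record. The sketch below rests on two assumptions:

- A trimmed, lower-cased email address identifies a customer across systems.
- A simple "first non-empty value wins" rule decides which field values survive.

Both the matching key (`match_key`) and the merge rule are hypothetical. Dedicated master data management tools apply far more nuanced matching and survivorship logic.

```python
from typing import Dict, Iterable

Record = Dict[str, str]

def match_key(record: Record) -> str:
    # Assumption: the normalized email identifies the same customer in every system.
    return record["email"].strip().lower()

def consolidate(*sources: Iterable[Record]) -> Dict[str, Record]:
    """Merge records from several systems into one master record per key.

    Values already present in the master record are kept; empty fields are
    filled from later sources (a deliberately simple survivorship rule).
    """
    master: Dict[str, Record] = {}
    for source in sources:
        for record in source:
            key = match_key(record)
            merged = master.setdefault(key, {"email": key})
            for field, value in record.items():
                if field == "email":
                    continue  # the normalized key is already stored
                if value and not merged.get(field):
                    merged[field] = value
    return master

crm = [{"email": "Ada@Example.com", "name": "Ada Lovelace", "phone": ""}]
billing = [{"email": "ada@example.com ", "name": "A. Lovelace", "phone": "555-0100"}]

master = consolidate(crm, billing)
print(master)
# {'ada@example.com': {'email': 'ada@example.com', 'name': 'Ada Lovelace', 'phone': '555-0100'}}
```

Two source records that differ only in case and whitespace end up as one master record. That record takes the name from the first system and the phone number from the second.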

Human Error in Data Entry and Management

Human error plays a significant role in database redundancy. Mistakes made during data entry are common pitfalls, such as entering the same information more than once or entering it incorrectly in different databases. These errors create redundant records that not only bloat the database but also undermine its integrity. Poor data management, where updates are not applied consistently to every copy, further exacerbates the issue.

Organizations can address these challenges by implementing data validation, which checks that entered data meets defined standards of correctness before it reaches the database. Training programs that help staff understand the importance of entering correct data also play a part.
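A validation step of this kind can be sketched as a function that runs before any insert. It normalizes the entry and then rejects anything that breaks the rules. The rules themselves are hypothetical examples, not a recommended standard:

- The name must not be empty.
- The email must have a plausible shape.
- The phone number must have at least 7 digits.
- The email must not already be on file.

The regular expression is deliberately loose and does not implement full email address syntax.

```python
import re
from typing import Dict, Set

# Hypothetical rule set; each organization would define its own standards.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MIN_PHONE_DIGITS = 7  # assumed minimum, for illustration only

def validate_entry(entry: Dict[str, str], existing_emails: Set[str]) -> Dict[str, str]:
    """Normalize one customer entry and raise ValueError if it breaks a rule."""
    name = entry.get("name", "").strip()
    email = entry.get("email", "").strip().lower()
    phone = re.sub(r"\D", "", entry.get("phone", ""))  # keep digits only

    if not name:
        raise ValueError("name is required")
    if not EMAIL_RE.match(email):
        raise ValueError(f"invalid email: {email!r}")
    if len(phone) < MIN_PHONE_DIGITS:
        raise ValueError(f"phone number too short: {phone!r}")
    if email in existing_emails:
        raise ValueError(f"duplicate entry for {email}")
    return {"name": name, "email": email, "phone": phone}

on_file = {"ada@example.com"}
print(validate_entry({"name": " Grace ", "email": "Grace@Example.com",
                      "phone": "(555) 010-0200"}, on_file))
# {'name': 'Grace', 'email': 'grace@example.com', 'phone': '5550100200'}
```

Normalizing before checking for duplicates matters. Without it, " ADA@example.com" and "ada@example.com" would pass as two different customers.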

Such training should cover the impact of redundancy and how to maintain a high level of data consistency. Automating data entry further reduces the margin of error, because data is then captured in the same format, and accurately, across platforms. By combining these strategies, organizations can substantially reduce redundant data caused by human error and improve the accuracy and efficiency of data entry and management.

Consequences of Increased Storage Costs Due to Redundancy

The financial ramifications of database redundancy are profound and show up first as higher storage expenses. Redundant copies of data must be stored continuously, so organizations have to buy additional disks or more storage capacity to hold them. Beyond the direct operational cost, this spreads the organization's resources less effectively than if they were concentrated on more valuable investments.
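The storage cost of redundant copies can be estimated with simple arithmetic: records × bytes per record × extra copies × price per GB. The figures in the sketch are hypothetical placeholders, not benchmarks or quoted prices:

- the record count,
- the average record size,
- the number of copies,
- the $0.10 per GB-month rate.

The estimate also excludes backups, replicas, and administrative overhead, which the following paragraphs discuss.

```python
def redundant_storage_cost(records: int, bytes_per_record: int, copies: int,
                           price_per_gb_month: float) -> float:
    """Monthly cost of storing every copy beyond the first.

    Uses decimal gigabytes (1 GB = 10**9 bytes); all inputs are assumptions.
    """
    if copies < 1:
        raise ValueError("copies must be at least 1")
    extra_bytes = records * bytes_per_record * (copies - 1)
    return extra_bytes / 10**9 * price_per_gb_month

# Hypothetical inputs: 500 million records of about 4 KB each, held in 3 places,
# at an assumed $0.10 per GB-month for primary storage.
monthly = redundant_storage_cost(500_000_000, 4_000, 3, 0.10)
print(f"${monthly:,.2f} per month, ${monthly * 12:,.2f} per year")
# $400.00 per month, $4,800.00 per year
```

The cost scales linearly with every extra copy. Removing one redundant copy in this example would halve the figure.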

These costs are not limited to capital expenditure. A larger storage estate also requires more management effort and more complex coordination. The added complexity slows down data retrieval and makes accurate analysis take longer, which amplifies costs further.

Storage growth also has an environmental cost, because powering storage hardware and cooling the computer systems consumes a lot of energy. Higher storage costs can therefore strain an organization's financial, operational, and environmental performance. Addressing database redundancy is not just a data management issue but a strategic financial necessity, both for optimizing resource allocation and for maintaining a competitive advantage in the digital era.

Data Inconsistency and Its Impact on Business Operations

Data inconsistency, a direct result of database redundancy, causes significant problems for a business. When the same data is stored in several places and the copies disagree, analytics built on them produce erroneous results, which undermines strategic decision-making. For instance, sales forecasts and inventory management can become highly inaccurate. The result can be overstocking or understocking, lost sales, and, in turn, customer dissatisfaction.
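Inconsistency between redundant copies can be made visible by comparing the copies field by field for every record they share. The sketch below assumes a simple data shape: two systems each hold a dictionary of records keyed by a shared ID. The names `warehouse` and `sales_db` and the stock figures are hypothetical. A stock count that differs between the two systems is exactly the kind of conflict that distorts inventory decisions.

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]
Conflict = Tuple[int, str, object, object]

def find_conflicts(copy_a: Dict[int, Record], copy_b: Dict[int, Record]) -> List[Conflict]:
    """Return (record_id, field, value_in_a, value_in_b) for every disagreement."""
    conflicts: List[Conflict] = []
    for record_id in sorted(copy_a.keys() & copy_b.keys()):  # records held in both copies
        a, b = copy_a[record_id], copy_b[record_id]
        for field in sorted(a.keys() | b.keys()):
            if a.get(field) != b.get(field):
                conflicts.append((record_id, field, a.get(field), b.get(field)))
    return conflicts

warehouse = {101: {"sku": "A-1", "on_hand": 40}, 102: {"sku": "B-7", "on_hand": 5}}
sales_db = {101: {"sku": "A-1", "on_hand": 40}, 102: {"sku": "B-7", "on_hand": 12}}

print(find_conflicts(warehouse, sales_db))
# [(102, 'on_hand', 5, 12)]
```

A check like this can only report that the copies disagree. Deciding which value is correct still requires a trusted source or extra metadata.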

Other significant impacts include weakened risk management and legal and compliance problems. These are especially serious in sectors such as financial services and healthcare, where timely and accurate information is required. Inconsistent data also makes marketing strategies harder to execute and to earn a good return on, because much of the data may need to be corrected first. The operational losses from these inefficiencies compound over time and erode competitive advantage as markets change.

Preparing and reconciling inconsistent data can also be time-consuming and costly, and it may absorb a growing share of an organization's attention. This highlights the need for effective data management aimed at keeping data synchronized, so that decision makers receive the right data at the right time. When database redundancy is not fixed at its source, companies operate in a sea of uncertainty that does not favor growth and innovation.

Complications in Data Backup and Recovery Processes

The issue of database redundancy extends into the realm of data backup and recovery, introducing several complications that can hinder an organization’s ability to safeguard its data effectively. With redundant data scattered across multiple databases, pinpointing which datasets need to be backed up becomes a complex task.

This complexity not only increases the time and resources required for backup operations but also elevates the risk of overlooking crucial data, potentially leaving gaps in the backup strategy. Furthermore, in data recovery, redundancy complicates identifying and restoring the most current and accurate data version.

Organizations may find themselves sifting through multiple versions of the same data, trying to work out which one is correct or most recent. This task is time-consuming and prone to errors. It becomes worse in emergencies that require quick restoration of data, because the time spent searching through redundant copies delays recovery.
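When every copy carries a reliable last-modified timestamp, picking the most recent version during recovery can be automated. The sketch assumes that each restored copy has an `id` and an ISO-8601 `updated_at` field, and that all systems set these fields consistently. Both the field names and the sample records are hypothetical. Without trustworthy timestamps, this choice is guesswork, which is the underlying problem the paragraph describes.

```python
from datetime import datetime
from typing import Dict, Iterable

def latest_versions(copies: Iterable[Dict]) -> Dict[str, Dict]:
    """Keep only the most recently updated copy of each record id.

    Assumes every copy carries an ISO-8601 `updated_at` timestamp in the
    same time zone; ties keep the copy that was seen first.
    """
    latest: Dict[str, Dict] = {}
    for rec in copies:
        ts = datetime.fromisoformat(rec["updated_at"])
        current = latest.get(rec["id"])
        if current is None or ts > datetime.fromisoformat(current["updated_at"]):
            latest[rec["id"]] = rec
    return latest

restored = [
    {"id": "cust-7", "email": "old@example.com", "updated_at": "2023-01-05T09:00:00"},
    {"id": "cust-7", "email": "new@example.com", "updated_at": "2023-06-12T14:30:00"},
    {"id": "cust-9", "email": "x@example.com", "updated_at": "2023-03-01T08:00:00"},
]
print({k: v["email"] for k, v in latest_versions(restored).items()})
# {'cust-7': 'new@example.com', 'cust-9': 'x@example.com'}
```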

Furthermore, every redundant copy must also be backed up, which increases the storage required by backup facilities and inflates backup costs. All of this calls for a data management approach that minimizes duplication. Such an approach shortens backup and recovery times while still protecting important data where necessary. It not only makes backup and recovery more reliable but also improves the overall structure of an organization's data systems.

Strategies for Reducing Database Redundancy

To address database redundancy effectively, organizations need a systematic approach that combines organizational and technical improvements. One element is regularly reviewing existing databases to find and eliminate redundant records.

Beyond removing existing duplicates, organizations should identify the process and system problems that lead to data redundancy in the first place. Adopting more advanced data integration solutions also plays an important role in connecting multiple systems. These tools manage how data flows and synchronizes between systems, which narrows the opportunities for duplication.
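A review of existing data can begin with a query that groups records by a normalized key and reports any group with more than one member. The sketch uses an in-memory SQLite database. The `customers(id, name, email)` table is hypothetical, and the choice of a trimmed, lower-cased email as the grouping key is an assumption. The query only finds candidates. Deciding which record survives is a separate step.

```python
import sqlite3

def find_duplicate_groups(conn: sqlite3.Connection):
    """List groups of customer rows that share the same normalized email."""
    query = """
        SELECT lower(trim(email)) AS norm_email,
               COUNT(*)           AS copies,
               group_concat(id)   AS ids
        FROM customers
        GROUP BY norm_email
        HAVING COUNT(*) > 1
        ORDER BY copies DESC, norm_email
    """
    return conn.execute(query).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO customers (name, email) VALUES (?, ?)",
    [("Ada", "ada@example.com"), ("Ada L.", " ADA@example.com"),
     ("Grace", "grace@example.com")],
)
# Each row is (normalized email, number of copies, comma-separated ids).
print(find_duplicate_groups(conn))
```

The order of IDs inside `group_concat` is not guaranteed by SQLite. Any follow-up step should therefore not rely on it.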

Another best practice is to establish proper data governance processes. Governance frameworks set out specific guidelines for handling data and bring order, consistency, and compatibility to data across every level of the organization. This includes defining rules for entering, amending, and deleting data to reduce human error.
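Some governance rules can be enforced by the database itself, so that every application writing to it is held to the same standard. The sketch expresses three hypothetical rules as SQLite constraints:

- A name must not be empty.
- An email must look roughly like an address.
- An email may appear only once, ignoring case.

The table, the rules, and the `add_customer` helper are illustrative assumptions. They are not a prescribed governance framework.

```python
import sqlite3

# Hypothetical governance rules expressed as table constraints.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        name  TEXT NOT NULL CHECK (length(trim(name)) > 0),
        email TEXT NOT NULL UNIQUE COLLATE NOCASE
              CHECK (email LIKE '%_@_%._%')
    )
""")

def add_customer(name: str, email: str) -> bool:
    """Insert a customer; return False if a constraint rejects the row."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO customers (name, email) VALUES (?, ?)",
                         (name, email.strip()))
        return True
    except sqlite3.IntegrityError as err:
        print(f"rejected {email!r}: {err}")
        return False

add_customer("Ada", "ada@example.com")   # accepted
add_customer("Ada L.", "ADA@example.com")  # duplicate under NOCASE: rejected
add_customer("", "blank@example.com")    # empty name: rejected
```

Constraints in the database complement validation in the application. Validation gives users friendlier feedback, while the constraints still catch bad rows from any path that bypasses that validation.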

Organizations should also consider dedicated data deduplication tools. These tools search for exact and near-duplicate records and remove them, which frees storage space and makes data easier to retrieve.
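The core idea of deduplication can be sketched in a few lines using two different techniques:

- **Exact duplicates.** These are detected by hashing the normalized text with SHA-256, after case and whitespace have been normalized.
- **Near duplicates.** These are detected with `difflib.SequenceMatcher.ratio()` from Python's standard library.

As a design choice, the sketch drops only exact duplicates and flags near duplicates for review rather than deleting them, because similar records are not always the same entity. The 0.9 similarity threshold and the sample rows are assumptions. The pairwise comparison is quadratic, which suits a sketch but not a large table; production tools typically use blocking or indexing to avoid comparing every pair.

```python
import hashlib
from difflib import SequenceMatcher
from typing import List, Tuple

def normalize(text: str) -> str:
    # Collapse case and runs of whitespace so trivially different copies match.
    return " ".join(text.lower().split())

def deduplicate(records: List[str],
                threshold: float = 0.9) -> Tuple[List[str], List[Tuple[str, str]]]:
    """Drop exact duplicates; return near-duplicate pairs for human review."""
    seen_hashes = set()
    kept: List[str] = []
    near_dupes: List[Tuple[str, str]] = []
    for rec in records:
        norm = normalize(rec)
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # exact duplicate after normalization: safe to drop
        seen_hashes.add(digest)
        match = next((k for k in kept
                      if SequenceMatcher(None, normalize(k), norm).ratio() >= threshold),
                     None)
        if match is not None:
            near_dupes.append((match, rec))  # similar, not identical: flag only
        kept.append(rec)
    return kept, near_dupes

rows = [
    "Ada Lovelace, 12 St James Square, London",
    "ada lovelace,  12 St James Square, London",   # exact after normalization
    "Ada Lovelace, 12 St. James Square, London",   # near duplicate
    "Grace Hopper, 1 Navy Yard, Washington",
]
kept, review = deduplicate(rows)
print(len(kept), review)
```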

By applying these strategies, organizations can manage their data in a clearer, more effective, and more cost-effective way. This approach not only curbs the proliferation of redundant data but also provides a long-term foundation for handling future data, increasing the organization's overall operational efficiency.