Understanding the basics of CloudLinux and how its resource limits work is essential to keeping a web hosting environment stable and responsive. By setting sensible limits for CPU, memory, I/O, and entry processes, you can keep any single site or web application on your server from hogging resources that other sites need. This blog post covers the key resource limits and practical tactics for working with them in CloudLinux.

Introduction to CloudLinux and Its Importance in Web Hosting

CloudLinux stands out among web hosting solutions for its approach to resource division and user isolation. In shared hosting, distributing server resources fairly among many accounts is a daunting task, because it has to be done while maintaining high performance and availability.

CloudLinux addresses this problem directly by placing each user in an isolated Lightweight Virtual Environment (LVE). This lets the hosting provider match resources to the accounts they serve, with the added advantage that no account can degrade the performance of another.

CloudLinux is not only about resource management. Its security features protect against threats inherent to the shared hosting model, where one account can affect the others. By compartmentalizing users, CloudLinux reduces this risk and improves the overall security of the server.

Because CloudLinux lets you set limits on nearly every resource, it is well suited to hosting providers who want to offer their clients a range of hosting plans. This flexibility makes it possible to allocate resources according to each user’s requirements, from a simple personal blog to a resource-intensive online shop, which underscores CloudLinux’s role in building a comprehensive and effective web hosting environment.

Key Resource Limits in CloudLinux: An Overview

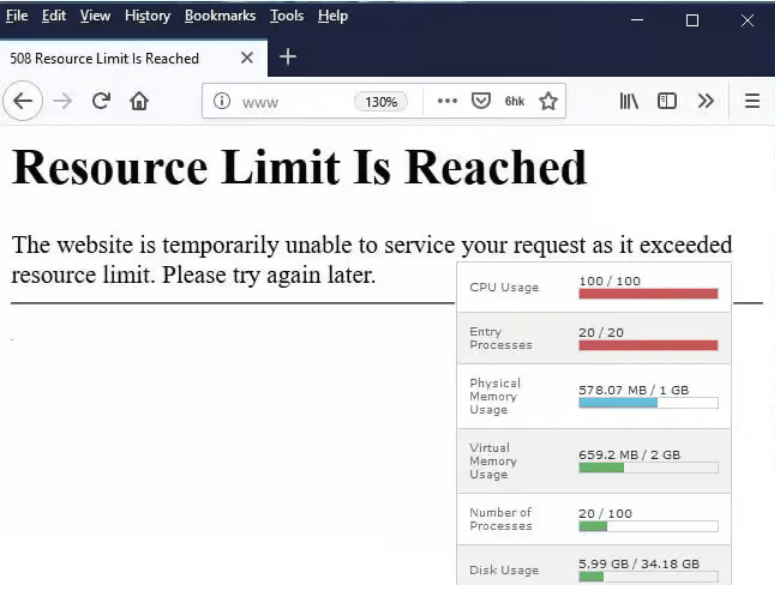

In CloudLinux, the cornerstone of creating a balanced and fair web hosting environment lies in the ability to precisely manage resource limits. These limits encompass CPU, memory, I/O, and entry processes, each serving a vital role in the preservation of server health and user satisfaction.

CPU limits are designed to cap the processing power available to each account, ensuring no single user can monopolize this critical resource. Memory limits are set to allocate a specific amount of RAM to users, preventing any single process from depleting available memory to the detriment of others.

I/O limits regulate the rate at which data is read from and written to disk, preventing the I/O bottlenecks that degrade server performance. Lastly, the entry process limit defines the number of connections a user can have open at once, so that no account can flood the server with simultaneous connections.

Combined, these resource limits form the core of CloudLinux’s ability to offer a solid, effective, and fairly shared hosting environment. They have to be managed carefully so that the server stays well optimized and meets the needs of users across the wide and diverse web hosting spectrum.
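To make the four limits concrete, here is a minimal sketch of how a provider might describe hosting plans in code. The plan names and values are purely illustrative, and the `lvectl set-user` flags (`--speed`, `--pmem`, `--io`, `--ep`) reflect my understanding of the CloudLinux command-line tool, so verify them against the documentation for your version. The sketch only builds the argument list and does not run anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanLimits:
    """Hypothetical per-account limits for one hosting plan."""
    cpu_percent: int      # CPU cap as a percentage of one core
    memory_mb: int        # physical memory cap, in megabytes
    io_kb_per_s: int      # disk throughput cap, in KB/s
    entry_processes: int  # simultaneous connections allowed


# Illustrative plans only; these numbers are not recommendations.
PLANS = {
    "blog": PlanLimits(cpu_percent=50, memory_mb=512,
                       io_kb_per_s=1024, entry_processes=10),
    "shop": PlanLimits(cpu_percent=200, memory_mb=2048,
                       io_kb_per_s=4096, entry_processes=40),
}


def lvectl_args(username: str, limits: PlanLimits) -> list:
    """Build (but do not execute) an lvectl argument list for one user.

    The flag names are an assumption about the lvectl CLI; check them
    before passing this list to subprocess.run on a real server.
    """
    return [
        "lvectl", "set-user", username,
        f"--speed={limits.cpu_percent}%",
        f"--pmem={limits.memory_mb}M",
        f"--io={limits.io_kb_per_s}",
        f"--ep={limits.entry_processes}",
    ]


print(" ".join(lvectl_args("alice", PLANS["blog"])))
```

Keeping plans in one structure like this makes it easier to review all four limits together instead of tuning each in isolation.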

Understanding CPU Limits and Their Impact on Server Performance

CPU limits are among the most important tools a web host has in CloudLinux for managing server load and dividing processing power between users. These limits prevent any single account from consuming more than its allotted share of CPU resources, which could otherwise lead to diminished service quality for other users sharing the same server. Enforcing these controls shares processing capacity in a rational manner and helps the server run as intended and meet user expectations.

Effectively managing CPU limits requires a careful analysis of user needs and server capacity. In practice, this means defining the maximum percentage of computational capacity that each user can consume, so that processing resources are not concentrated in a few accounts. This is especially effective in shared hosting, where many users run their websites and applications on a single server.

When CPU limits are properly configured, users experience consistent website performance, even during peak traffic times. This is because the CPU cap is enforced per account: a busy site is throttled at its own limit instead of taking CPU time away from its neighbors, so no single user’s activities can impact overall server stability. Hosting providers must regularly review and adjust these limits based on current server load and user demands to maintain optimal server performance and to keep all hosted sites running smoothly.
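One way to ground the analysis of user needs against server capacity is to compare the sum of all per-account CPU caps with what the hardware can deliver. The sketch below is a hypothetical helper, not a CloudLinux API. It assumes each cap is expressed as a percentage of one core, so a server with 8 cores has a capacity of 800.

```python
def cpu_oversell_ratio(cores: int, account_limits_percent: list) -> float:
    """Return the sum of per-account CPU caps divided by server capacity.

    A ratio of 1.0 means the caps exactly add up to the hardware.
    Shared hosts usually run above 1.0 because accounts rarely peak
    at the same moment; how far above is a judgment call.
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    capacity_percent = cores * 100  # 100% == one full core (assumption)
    return sum(account_limits_percent) / capacity_percent


# Example: 40 accounts capped at one core each on an 8-core server.
ratio = cpu_oversell_ratio(8, [100] * 40)
print(f"oversell ratio: {ratio:.1f}")
```

Tracking this ratio over time gives a simple signal for when raising caps or adding accounts starts to put peak-time performance at risk.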

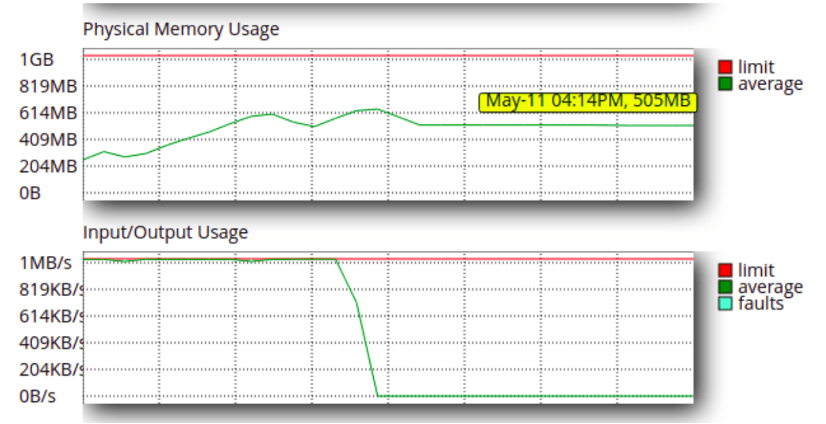

Memory Limits in CloudLinux: Ensuring Efficient Resource Usage

Memory limits within CloudLinux play a pivotal role in managing the allocation of RAM across users in a shared hosting environment. By capping the amount of memory each account can use, hosting providers reduce the chance that a single process consumes all available memory on the server and causes other users to experience a temporary loss of service.

Setting these limits is vital because it ensures that memory is shared fairly: every website or application hosted on the server gets enough memory to run its operations without encroaching on other websites or applications.

Setting and managing these limits calls for a working understanding of the load that typical applications and websites place on the server. It involves not just setting a hard cap on memory usage but also allocating memory in a manner that aligns with the performance needs of each user, while still preserving a buffer to accommodate spikes in demand.

Allocating memory strategically also reduces the likelihood that memory becomes the bottleneck, which improves the overall performance of the server and the experience of its users. Ongoing oversight of these limits, with changes based on usage patterns and server performance, is crucial to keeping hosting healthy and stable.
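The idea of a cap that follows real usage while preserving a buffer for spikes can be sketched as a small calculation. The function below is a hypothetical helper: the 25% headroom and the 128 MB rounding step are assumptions chosen for illustration, and the usage samples would come from whatever monitoring you already have.

```python
import math


def suggest_memory_limit_mb(samples_mb, headroom=0.25, step_mb=128):
    """Suggest a memory cap from observed usage samples.

    Takes the peak observed usage, adds a headroom fraction as a
    buffer for spikes, and rounds up to the next multiple of step_mb.
    """
    if not samples_mb:
        raise ValueError("need at least one usage sample")
    target = max(samples_mb) * (1 + headroom)
    return math.ceil(target / step_mb) * step_mb


# Example: peak usage of 505 MB with 25% headroom gives 631.25 MB,
# which rounds up to the next 128 MB step.
print(suggest_memory_limit_mb([310, 420, 380, 505]))
```

Using the peak is deliberately conservative; a provider that tolerates occasional memory faults might prefer a high percentile instead.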

Managing I/O Limits for Optimal Disk Performance

In CloudLinux, setting appropriate I/O limits is a critical factor in ensuring that disk operations do not become a bottleneck for server performance. These limits are necessary to avoid a situation where a few users perform so many read and write operations that the disk slows down for everyone else.

The fundamental strategy is to learn the typical disk I/O consumption of the hosted sites and applications, then set I/O limits that protect the health of the disks while still allowing heavier use by the applications that need it. This involves not just capping the I/O available to each user but also adjusting these limits as disk usage and server load change.

Managed this way, the limits prevent one or more users from monopolizing disk access at the expense of the server’s responsiveness and reliability. In addition, continuous monitoring of I/O usage helps hosting providers make sound decisions about adjusting limits as server utilization changes. This strategic management of I/O limits is essential for sustaining optimal disk performance, thereby enhancing the user experience across the board.
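As a rough illustration of using monitoring data to decide which I/O limits need attention, the sketch below compares each account’s average throughput with its cap. The account names, the figures, and the 90% and 20% thresholds are all hypothetical; in practice the averages would come from your I/O statistics.

```python
def classify_io_usage(accounts, high=0.9, low=0.2):
    """Label each account by how close its average I/O is to its cap.

    accounts maps a username to (average_kb_per_s, limit_kb_per_s).
    Returns a dict of username -> "near limit", "mostly idle", or "ok".
    """
    labels = {}
    for user, (average, limit) in accounts.items():
        utilization = average / limit
        if utilization >= high:
            labels[user] = "near limit"   # candidate for a higher cap or a bigger plan
        elif utilization < low:
            labels[user] = "mostly idle"  # the cap could probably be lowered
        else:
            labels[user] = "ok"
    return labels


# Hypothetical measurements: (average KB/s, limit KB/s).
usage = {
    "alice": (950, 1024),
    "bob": (100, 1024),
    "carol": (500, 1024),
}
print(classify_io_usage(usage))
```

A report like this does not change any limit by itself; it only narrows down which accounts deserve a closer look.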

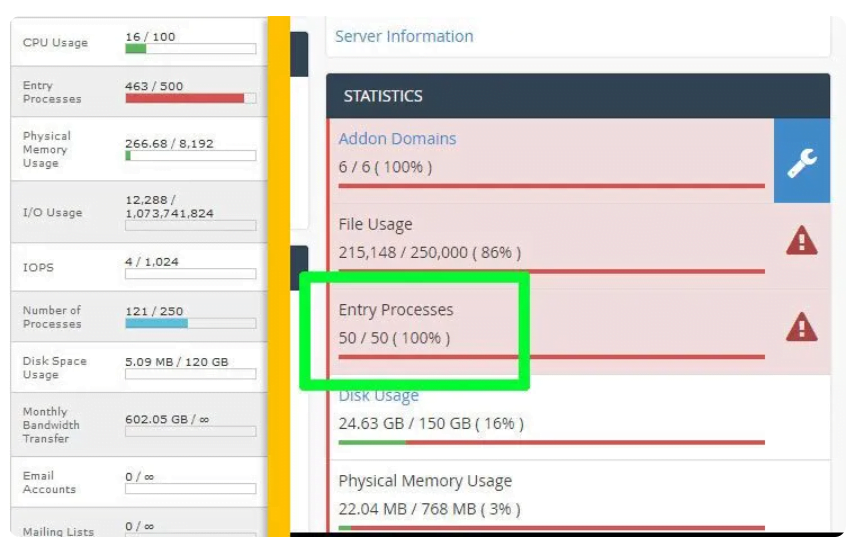

Entry Processes: Controlling Concurrent Connections

Entry processes are an equally important part of CloudLinux’s resource management tools: the number of simultaneous connections to the server revolves around them. These limits help avoid a situation in which one user sends more requests at once than the server can handle, which starves other users of resources and lowers overall server performance.

In managing entry processes, hosting providers must tread a fine line: each account needs enough connections to serve its legitimate traffic, but not so many that it can overwhelm the server with several times the connections it actually needs.

This means understanding normal user traffic and setting limits that can absorb traffic surges without letting any user take too much of the server’s resources. Fine-tuning these limits based on the actual load on the server and the behavior of specific accounts further improves the balance between ease of use and restriction.

Successful control of entry processes keeps the server’s workload steady, so that all users have the resources they need to run their websites and applications without interruption.
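A simple way to turn traffic figures into a starting value for this limit is Little’s law: the average number of requests in flight equals the arrival rate multiplied by the average time each request takes. The sketch below applies that rule with a surge multiplier. The function name, the example traffic, and the multiplier of 3 are assumptions for illustration, not CloudLinux guidance.

```python
import math


def estimate_entry_processes(requests_per_second, avg_response_seconds,
                             surge_factor=3.0):
    """Estimate a concurrent-connection limit using Little's law.

    Average concurrency = arrival rate * average time per request.
    The surge factor leaves room for legitimate traffic spikes.
    """
    if requests_per_second < 0 or avg_response_seconds < 0:
        raise ValueError("rate and response time must be non-negative")
    average_concurrency = requests_per_second * avg_response_seconds
    # Always allow at least one connection.
    return max(1, math.ceil(average_concurrency * surge_factor))


# Example: 20 requests/s at 0.25 s each is 5 in flight on average,
# so a surge factor of 3 suggests a limit of 15.
print(estimate_entry_processes(20, 0.25))
```

An estimate like this is only a starting point; the limit should still be tuned against the faults and load actually observed for the account.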

Practical Tips for Configuring CloudLinux Resource Limits

When it comes to managing CloudLinux resource limits, there is no universal strategy; that is why it is pivotal to adapt your approach to load requirements and user activity. One key maintenance task is regularly monitoring server utilization and per-account resource usage, which reveals where changes are required because capacity is either sitting unused or being exhausted.

Consult with your users to learn their needs and expectations for resource usage. This insight gives you a better basis for setting limits and for striking the right balance between performance and efficiency. Utilize CloudLinux’s tools and reports to track resource consumption trends over time, which can inform more data-driven adjustments to limits.

When configuring limits, aim for a balance that supports the majority of your users’ needs without compromising server stability. Last but not least, following up with your users and educating them about resource conservation can lead to lighter websites and applications that put less pressure on the server. Implementing these strategies will contribute to a more harmonious server environment, where resources are utilized judiciously, and all hosted sites can perform optimally.
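Tracking consumption trends over time can be as simple as fitting a straight line to weekly peak usage and asking when it would reach the current limit. The sketch below uses a plain least-squares slope. The weekly figures and the limit of 80 are hypothetical, and real usage is rarely this linear, so treat the projection as a rough prompt for review and not as a forecast.

```python
def weekly_trend(values):
    """Return the least-squares slope of values over week index 0..n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def weeks_until_limit(values, limit):
    """Project how many weeks remain before usage reaches the limit.

    Returns None when usage is flat or falling.
    """
    slope = weekly_trend(values)
    if slope <= 0:
        return None
    return max(0.0, (limit - values[-1]) / slope)


# Hypothetical weekly peak CPU usage (percent) for one account.
peaks = [40, 45, 50, 55]
print(weekly_trend(peaks), weeks_until_limit(peaks, 80))
```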